Research and Writing

Recently Published

Tax Filing Websites Have Been Sending Users’ Financial Information to Facebook, By Simon Fondrie-Teitler, Angie Waller, and Colin Lecher, The Markup, Nov. 22, 2022.

Facebook Is Receiving Sensitive Medical Information from Hospital Websites, By Todd Feathers, Simon Fondrie-Teitler, Angie Waller, and Surya Mattu, The Markup, Jun. 16, 2022.

Facebook Promised to Remove “Sensitive” Ads. Here’s What It Left Behind, By Angie Waller and Colin Lecher, The Markup, May 12, 2022.

How We Built a Meta Pixel Inspector, By Surya Mattu, Angie Waller, Simon Fondrie-Teitler, and Micha Gorelick, The Markup, Apr. 28, 2022.

Germany’s Far-Right Political Party, the AfD, Is Dominating Facebook This Election, By Angie Waller and Colin Lecher, The Markup, Sept. 22, 2021.

How We Built a Facebook Feed Viewer, By Surya Mattu, Angie Waller, Jon Keegan, and Micha Gorelick, The Markup, Mar. 11, 2021.

How We Built a Facebook Inspector, By Surya Mattu, Leon Yin, Angie Waller, and Jon Keegan, The Markup, Jan. 5, 2021.

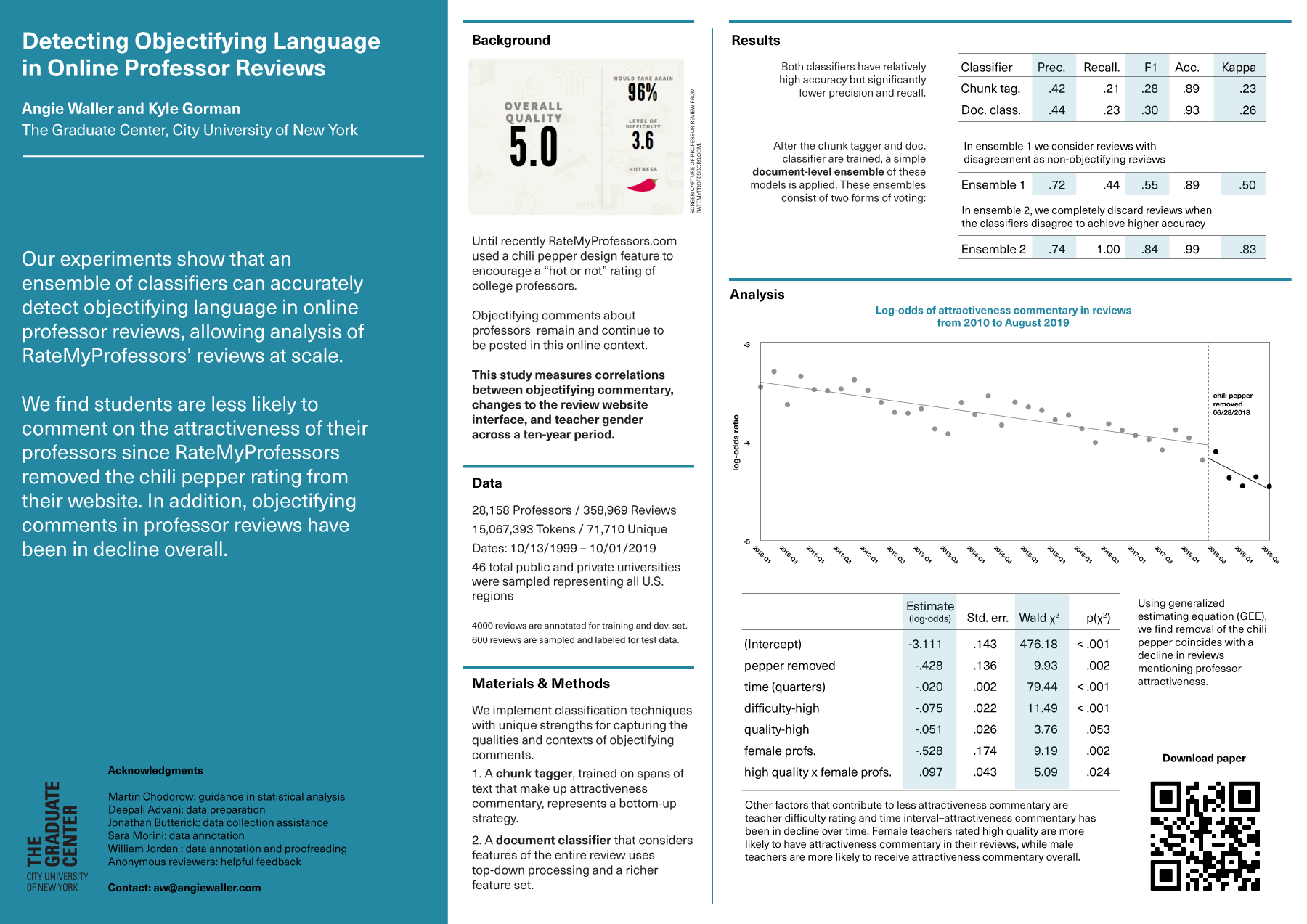

Detecting Objectifying Language in Online Professor Reviews

Abstract

Student reviews often refer to professors’ physical appearances. Until recently, RateMyProfessors.com, the focus of this study, used a design feature that encouraged a “hot or not” rating of college professors. In the wake of the #MeToo and #TimesUp movements, public awareness that such reviews are inappropriate has grown, yet objectifying comments persist and continue to be posted on the site. This paper describes two supervised text classifiers for detecting objectifying commentary in professor reviews. An ensemble of these classifiers yields a model that tracks objectifying commentary at scale. Correlations between objectifying commentary, changes to the review website’s interface, and teacher gender are measured across a ten-year period.
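The abstract does not say which models the two classifiers are or how the ensemble combines them. The following is a minimal sketch that assumes soft voting, where the two classifiers' probabilities that a review is objectifying are averaged and the mean is compared against a threshold. It also shows how flagged reviews could be tracked by year and professor gender. The function names, the 0.5 threshold, and the (year, gender, p_a, p_b) record layout are hypothetical illustrations, not the paper's method.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record: (year, professor gender, p_a, p_b), where p_a and p_b are
# probabilities from two already-trained classifiers (assumed inputs).
Review = Tuple[int, str, float, float]


def ensemble_flag(p_a: float, p_b: float, threshold: float = 0.5) -> bool:
    """Soft voting (assumed): average the two probabilities, then threshold the mean."""
    return (p_a + p_b) / 2 >= threshold


def objectifying_rate_by_group(
    reviews: List[Review], threshold: float = 0.5
) -> Dict[Tuple[int, str], float]:
    """Share of reviews flagged as objectifying, keyed by (year, gender)."""
    flagged: Dict[Tuple[int, str], int] = defaultdict(int)
    total: Dict[Tuple[int, str], int] = defaultdict(int)
    for year, gender, p_a, p_b in reviews:
        key = (year, gender)
        total[key] += 1
        if ensemble_flag(p_a, p_b, threshold):
            flagged[key] += 1
    # Every key in `total` has at least one review, so the division is safe.
    return {key: flagged[key] / total[key] for key in total}


if __name__ == "__main__":
    # Made-up probabilities, for illustration only.
    sample = [
        (2012, "F", 0.9, 0.7),
        (2012, "F", 0.2, 0.1),
        (2012, "M", 0.6, 0.3),
        (2018, "F", 0.4, 0.5),
    ]
    print(objectifying_rate_by_group(sample))
```

Averaging probabilities lets a confident classifier outweigh an uncertain one. Requiring both classifiers to agree (hard voting with an AND rule) would be a stricter alternative. Per-year rates like these are the kind of series that could then be compared against interface changes and teacher gender.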